Keywords

Abstract

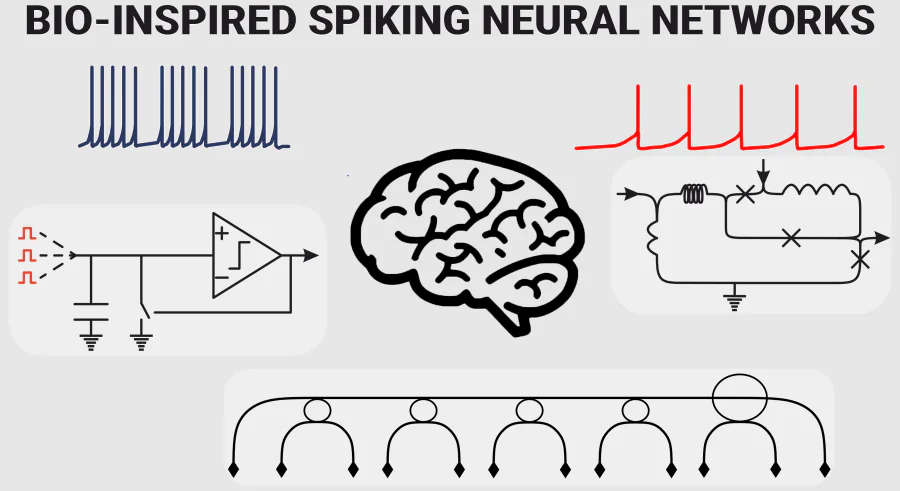

The extensive development of the field of spiking neural networks has led to many areas of research that have a direct impact on people’s lives. As the most bio-similar of all neural networks, spiking neural networks not only allow for the solution of recognition and clustering problems (including dynamic ones), but they also contribute to the growing understanding of the human nervous system. Our analysis has shown that hardware implementation is of great importance, since the specifics of the physical processes in the network cells affect their ability to simulate the neural activity of living neural tissue and the efficiency of certain stages of information processing, storage and transmission. This survey reviews existing hardware neuromorphic implementations of bio-inspired spiking networks in the “semiconductor”, “superconductor”, and “optical” domains. Special attention is given to the potential for effective “hybrids” of different approaches.

List of main acronyms

AI: artificial intelligence;

ANN: artificial neural network;

CNN: convolutional neural network;

CMOS: complementary metal-oxide semiconductor;

DFB-SL: distributed feedback semiconductor laser;

HH: Hodgkin-Huxley;

ISI: inter-spike interval;

JJ: Josephson junction;

LIF: leaky integrate-and-fire;

NIST: National Institute of Standards and Technology;

ONN: optical neural network;

PCM: phase change materials;

QPSJ: quantum phase-slip junction;

RFF: receptive fields filter;

RSFQ: rapid single-flux-quantum;

SFQ: single-flux quantum;

SNN: spiking neural network;

STDP: spike-timing dependent plasticity;

SQUID: superconducting quantum interference device;

VCSEL: vertical-cavity surface-emitting laser;

XOR: exclusive OR logical operator.

1. Introduction

The last decade has demonstrated a significant increase in interdisciplinary research in neuroscience and neurobiology (this was reflected even in the decisions of the Nobel Committee[1, 2] in 2024). The convergence of mathematics, physics, biology, neuroscience and computer science has led to the hardware realisation of numerous models that mimic the behaviour of living nervous tissue and reproduce characteristic neural patterns. Spiking neural networks (SNNs) have played a crucial role in these fields of knowledge. In these networks, neurons exchange short pulses (about 1-2 ms for bio-systems) of the same amplitude (about 100 mV for bio-systems).[3, 4] SNNs come closest to mimicking the activity of living nervous tissue (which is capable of solving surprisingly complex tasks with limited resources) and have the greatest biosimilarity and bioinspirability.

Spiking neural networks use a completely distinct method of information transfer between neurons: they encode input data as a series of discrete-time spikes that resemble the action potential of biological neurons. In fact, the fundamental idea of SNNs is to achieve the closest possible biosimilarity and use it to solve specific tasks. These problems can be roughly divided into two groups: the first group is focused on solving traditional neural network challenges, with more emphasis on dynamic information recognition (speech, video), while the second group is aimed at imitating the nervous activity of living beings, reproducing characteristic activity patterns, recreating the work of the human brain. Currently, there is an ambitious project that aims to create a full-fledged artificial mouse brain.[5] Moreover, the second group includes such tasks as: using motor biorhythms for neural control in robotics,[6-8] controlling human movements (bioprosthetics, functional restoration of mobility),[9] understanding learning processes and memory effects,[10-12] creating brain-computer interfaces,[13-17] etc.

Therefore, the hardware development of bio-inspired SNNs is both important and promising. For this reason, it is crucial to take stock of the current progress and the conditions for the development of this field, given the immense and daily growing amount of information. Moreover, in terms of signalling, an SNN is better suited to hardware implementation than an artificial neural network (ANN), since neurons are active only at the moments when a voltage spike is generated, which reduces the overall power consumption of the network and simplifies computation.

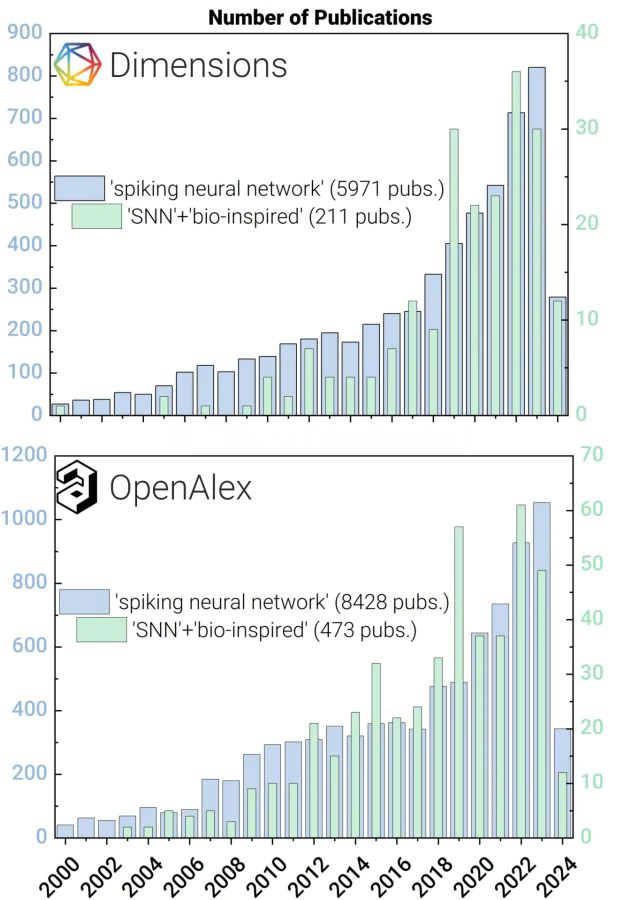

Figure 1 illustrates the intensification of work on the topic over the last decades. It shows an analysis of publication activity (indexed in the Dimensions and OpenAlex databases) on the topic of spiking neural networks, and on the same topic filtered by the keyword “bio-inspired”. Since around 2016, interest in both spiking neural networks and bio-inspired networks has grown significantly. The studies presented in these publications cover different implementations of SNNs: software, CMOS (especially memristor-based), superconducting, optical, and hybrid ones.

In this paper we review the advances in different hardware directions of bio-inspired spiking neural network evolution and provide a brief summary of the future of the field, its advantages, challenges, and drawbacks. We also review the basic software implementations of SNNs.

The first part of this review is devoted to the mathematical foundations of bio-inspired spiking neural networks, their idea and software realisation. The second part is dedicated to CMOS-based bio-inspired neuromorphic circuits, covering semiconducting and memristive realisations. The third part is devoted to superconducting realisations, not of the whole network, but of its elements and some of its functional parts. Finally, we consider bio-inspired elements for optical neuromorphic systems and provide a brief discussion of each area of activity as well as a general conclusion on the evolution of bio-inspired neuromorphic systems.

The brief conclusion is that there is no single approach that has overwhelming advantages at the current moment, and it is quite likely that the necessary direction of development of the field has not yet been found. However, we can already say with certainty that the hybrid approach can provide some success in the formation of complex deep spiking neuromorphic networks.

2. Mathematical fundamentals of bio-inspired spiking neural networks

A spiking neural network is fundamentally different from the second generation of neural networks: instead of continuously changing values over time, such a network works with discrete events (chains of events) that occur at specific points in time. Discrete events are encoded by impulses (spikes) received at the input of the neural network and processed by it in a specific way, as shown in Figure 2. The output of such a network is also a sequence of spikes, encoding the result of the network’s activity. In a real neuron, the transmission of impulses is described by differential equations that correspond to the physical and chemical processes of action potential formation. When the action potential reaches its threshold, the neuron generates a spike and the membrane potential returns to its initial level, see Figure 2a. An accurate representation of neuronal activity and its response to various input signals requires a general mathematical model that describes all the necessary processes associated with its spike activity and action potential formation, while remaining sufficiently simple for its successful use in various applications.

2.1. Mathematical models of bio-inspired neurons and networks

Hodgkin-Huxley model

The discussion of applications begins with the simplest and most studied software implementations of SNNs: the most popular way to describe the initiation and propagation of action potentials in neurons is the Hodgkin-Huxley (HH) model.[18] The HH model is treated as a conductance-based system in which each neuron is a circuit of parallel capacitors and resistors,[19] and it describes how action potentials in nerve cells (neurons) emerge and propagate. Basically, the HH model contains[20] four components of the current flowing through the neuron membrane, which is formed by a lipid bilayer and possesses a potential

Of course, the biochemical processes in the living nerve cell are more complicated and therefore the HH model could be modified by adding extra terms for other ions (Cl− or Ca++, for example) or by using non-linear conductance models (

Izhikevich model

The Izhikevich model is one of the most computationally efficient neuron models that still represents neuronal activity accurately. According to Ref. [23], bifurcation methodologies[24] enable us to reduce many biophysically accurate Hodgkin-Huxley-type neuronal models to a two-dimensional (2D) system of ordinary differential equations

with the auxiliary after-spike resetting

Here,
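A minimal simulation sketch of this model, assuming the form in which it is most commonly quoted: v' = 0.04v² + 5v + 140 − u + I and u' = a(bv − u), with the after-spike reset v ← c, u ← u + d once v reaches 30 mV. The parameter values (a = 0.02, b = 0.2, c = −65, d = 8, the usual "regular spiking" set), the forward-Euler scheme, the time step and the function name `simulate_izhikevich` are illustrative assumptions rather than choices taken from the text.

```python
import numpy as np

def simulate_izhikevich(I, a=0.02, b=0.2, c=-65.0, d=8.0, dt=0.5, v_peak=30.0):
    """Forward-Euler integration of the (assumed) standard Izhikevich equations.

    I  : array of input current values, one per time step
    dt : time step in ms
    Returns the membrane potential trace (mV) and the list of spike times (ms).
    """
    v = c          # membrane potential, started at the reset value
    u = b * v      # recovery variable
    v_trace = np.empty(len(I))
    spike_times = []
    for k, I_k in enumerate(I):
        v += dt * (0.04 * v**2 + 5.0 * v + 140.0 - u + I_k)
        u += dt * a * (b * v - u)
        if v >= v_peak:
            # After-spike resetting: clip the recorded peak, reset v, bump u.
            v_trace[k] = v_peak
            v = c
            u += d
            spike_times.append(k * dt)
        else:
            v_trace[k] = v
    return v_trace, spike_times

# 1000 ms of constant input current (illustrative value).
v_trace, spikes = simulate_izhikevich(np.full(2000, 10.0))
```

Changing only the four parameters a, b, c and d is what lets the same two equations reproduce different firing patterns, which is the main reason the model is attractive for large simulations.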

Leaky Integrate-and-Fire Neuron Model

The model most frequently used in hardware is built on so-called leaky integrate-and-fire (LIF) neurons. Such neurons, whose membrane potentials

where

is a function of the threshold voltage
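As a rough illustration of LIF dynamics, the sketch below assumes the common textbook form τ_m dV/dt = −(V − V_rest) + R·I(t), with a spike and a reset to V_reset whenever V reaches the threshold V_th. The dimensionless parameter values, the forward-Euler integration and the name `simulate_lif` are assumptions made for the example.

```python
import numpy as np

def simulate_lif(I, dt=0.1, tau_m=10.0, R=1.0, v_rest=0.0, v_th=1.0, v_reset=0.0):
    """Forward-Euler simulation of a leaky integrate-and-fire neuron.

    I  : array of input current values, one per time step
    dt : time step in ms; tau_m is the membrane time constant in ms
    Returns the membrane potential trace and the list of spike times (ms).
    """
    v = v_rest
    v_trace = np.empty(len(I))
    spike_times = []
    for k, I_k in enumerate(I):
        # Leak towards v_rest plus integration of the input current.
        v += dt / tau_m * (-(v - v_rest) + R * I_k)
        if v >= v_th:
            spike_times.append(k * dt)
            v = v_reset  # fire and reset
        v_trace[k] = v
    return v_trace, spike_times

# A supra-threshold constant input makes the neuron fire periodically;
# a sub-threshold one (R * I < v_th) never reaches the threshold.
_, spikes_strong = simulate_lif(np.full(2000, 1.5))
_, spikes_weak = simulate_lif(np.full(2000, 0.5))
```

For a constant supra-threshold input, the inter-spike interval of this form is τ_m ln(RI / (RI − V_th)), so the firing rate is a monotonic function of the input current.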

2.2. Coding information in SNN

The brain of a living being processes a wide variety of information, which can come from different sensory organs as well as signals from the nervous system of different internal organs. How does the brain understand which signal comes from the optic nerve and which from the auditory nerve, for example? There are different ways of encoding incoming information, processing it by a specially trained neural network (a specific area in the brain) and interpreting the results of the processing. In other words, there are ways of encoding input and output signals. And both are very important: in the first case, the information is encoded so that the system can work with it, and in the second case, so that the general decision-making centre (the brain) can perceive or handle the processed information. Two types of coding are generally used in artificial SNNs: rate coding and temporal (or latency) coding, although there are others.[27]

Rate coding converts input information intensity into a “firing” rate or spike count. For example, a pixel in some image, that has a specific RGB code and brightness, can be converted or coded into a Poisson train – a sequence of spikes, based on this information (information of its intensity). There are several types of rate coding: count rate coding, density rate coding and population rate coding. In terms of output interpretation, the processing centre will select the one that has the highest “firing” rate or spike count at a particular point in time.
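Rate coding of pixel intensities can be sketched as follows. The example assumes intensities normalised to [0, 1] and approximates a Poisson train by an independent Bernoulli draw per time step; the name `poisson_rate_encode` and the parameter `max_prob` are hypothetical.

```python
import numpy as np

def poisson_rate_encode(intensity, n_steps=100, max_prob=0.5, rng=None):
    """Encode intensities in [0, 1] as spike trains.

    Each time step emits a spike with probability intensity * max_prob,
    which approximates a Poisson train for small probabilities.
    Returns a boolean array of shape (n_steps, *intensity.shape).
    """
    rng = np.random.default_rng() if rng is None else rng
    intensity = np.clip(np.asarray(intensity, dtype=float), 0.0, 1.0)
    p_spike = intensity * max_prob
    return rng.random((n_steps,) + intensity.shape) < p_spike

# Three "pixels": dark, dim and bright.
rng = np.random.default_rng(0)
spikes = poisson_rate_encode([0.0, 0.2, 0.9], n_steps=1000, rng=rng)
counts = spikes.sum(axis=0)       # spike count per input
winner = int(np.argmax(counts))   # rate-based readout: highest count wins
```

The readout in the last line mirrors the output interpretation described above: the unit with the highest spike count is selected.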

Another natural principle of information coding is temporal (or latency) coding. It converts input intensity into a spike time, so that the information is carried by the moment at which the spike occurs. The spike weighting ensures that different “firing” times carry different amounts of information: the earlier a spike arrives, the larger the weight it carries and the more information it transmits to the post-synaptic neurons. In terms of output interpretation, the processing centre selects the signal that came first by a certain point in time (that is, the signal from the output neuron that fired first among all output neurons).
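A minimal sketch of latency coding, assuming a linear mapping from normalised intensity to spike time (logarithmic mappings are also used in practice). The names `latency_encode` and `first_to_fire` and the time window `t_max` are hypothetical.

```python
import numpy as np

def latency_encode(intensity, t_max=100.0):
    """Map intensities in [0, 1] to single spike times in [0, t_max] ms.

    Stronger inputs fire earlier: intensity 1 fires at t = 0,
    intensity 0 fires at t = t_max.
    """
    intensity = np.clip(np.asarray(intensity, dtype=float), 0.0, 1.0)
    return t_max * (1.0 - intensity)

def first_to_fire(spike_times):
    """Readout: index of the neuron whose spike arrived first."""
    return int(np.argmin(spike_times))

times = latency_encode([0.1, 0.8, 0.5])
winner = first_to_fire(times)   # index of the strongest input
```

Each input contributes a single spike here, which is why latency coding tends to need far fewer spikes than rate coding for the same input.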

There is also another type of information coding called delta modulation. This type of coding converts the incoming analogue signal into a spike train that reflects temporal changes in the signal intensity (magnitude). For example, if the input signal is increasing, the network input receives spikes at a frequency that depends on the rate of increase of the signal (its derivative): the faster the signal increases in time, the more frequent the spikes are. Conversely, a decreasing signal is accompanied by the absence of spikes.
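One possible way to realise delta modulation in software is sketched below. It assumes a sampled signal and a fixed step `delta`: a spike is emitted each time the signal has risen by `delta` above a running reference level, and the reference simply follows the signal downwards without emitting spikes. The function name and this particular reference-tracking scheme are assumptions.

```python
import numpy as np

def delta_encode(signal, delta=0.1):
    """Emit a spike whenever the signal has risen by `delta` above a reference.

    Returns an integer array of 0/1 spikes, one entry per sample.
    A falling signal lowers the reference silently, so it produces no spikes.
    """
    signal = np.asarray(signal, dtype=float)
    spikes = np.zeros(len(signal), dtype=int)
    ref = signal[0]
    for k in range(1, len(signal)):
        if signal[k] - ref >= delta:
            spikes[k] = 1
            ref += delta        # reference catches up one step per spike
        elif signal[k] < ref:
            ref = signal[k]     # follow a decreasing signal without spiking
    return spikes

slow = delta_encode(np.linspace(0.0, 1.0, 101))    # slow ramp: sparse spikes
fast = delta_encode(np.linspace(0.0, 1.0, 21))     # fast ramp: dense spikes
falling = delta_encode(np.linspace(1.0, 0.0, 50))  # decreasing: no spikes
```

In this sketch the spike rate saturates at one spike per sample, so `delta` has to be chosen relative to the steepest slope that must be resolved.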

2.3. SNN learning techniques

The complex dynamics of spike propagation over SNNs makes it difficult to design a learning algorithm that gives the best result. Currently, there are three main types of methods for training spiking neural networks, as described in Ref. [27]: shadow training, backpropagation on spikes, and training based on local learning rules.

Shadow training

The idea of shadow training is to use the training algorithms of a conventional ANN to build a spiking network by converting a trained ANN into a trained SNN. The process is as follows: first, the conventional ANN is trained; then the activation function of each neuron in the ANN is replaced by a separate operator that non-linearly transforms the signal arriving at the neuron into a spike frequency or a delay between spikes. For example, the conversion from a convolutional neural network (CNN) to an SNN can be done by manually reprogramming the convolution kernel for spike-train inputs so that the SNN produces the same output as the trained CNN.[28] The network weights remain the same.
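The replacement of an activation function by a spiking operator can be illustrated with a single neuron. The sketch assumes a non-leaky integrate-and-fire unit with reset by subtraction, driven by a constant input `z` equal to the ANN pre-activation; its firing rate then approximates ReLU(z) for 0 ≤ z ≤ 1. This is one common conversion idea, not necessarily the procedure of Ref. [28], and the name `if_neuron_rate` is hypothetical.

```python
def if_neuron_rate(z, n_steps=1000, v_th=1.0):
    """Firing rate (spikes per step) of a non-leaky integrate-and-fire neuron.

    The neuron integrates a constant input z at every step and emits a spike
    whenever its potential reaches v_th, which is then subtracted.
    For 0 <= z <= v_th the rate approaches z / v_th; for z < 0 it is zero.
    """
    v = 0.0
    n_spikes = 0
    for _ in range(n_steps):
        v += z
        if v >= v_th:
            v -= v_th       # reset by subtraction keeps the residual charge
            n_spikes += 1
    return n_spikes / n_steps

# Compare the spike rate with the ReLU activation it stands in for.
for z in (-0.5, 0.0, 0.3, 0.75):
    print(z, max(z, 0.0), if_neuron_rate(z))
```

The resolution of the rate is 1 / `n_steps`, so a precise approximation of the activation requires many simulation time steps.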

The main advantage of this training process is that most of the time we deal with an ANN, with all the benefits of training conventional neural networks. This approach is used in tasks typical of ANNs, such as image classification. However, converting activation functions into spike trains usually requires a large number of simulation time steps, which may undermine the original idea of the energy efficiency of spiking neurons. Most importantly, the conversion only approximates the activation, which negatively affects the performance of the SNN. This further confirms that other learning algorithms need to be developed to train SNNs; until then, SNNs will remain just a shadow of ANNs.

Backpropagation training

The backpropagation training algorithm for ANNs can also be applied to SNNs by calculating the gradients of the weight change for every neuron. Implementations of this algorithm for SNNs vary because the original ANN backpropagation requires the derivative of the loss function. Taking derivatives over spikes is problematic: spike generation depends on the membrane threshold potential as a step function, whose derivative is zero everywhere except at the threshold, where it becomes infinite. To avoid this, backpropagation techniques for SNNs take other parameters of the neuron output signal into account; for example, backpropagation on spikes uses the change in spike timing with respect to the change in network weights.
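The difficulty with differentiating a spike can be seen numerically. The sketch below models spike generation as a step function of the membrane potential and estimates its derivative by central differences; the function names and the threshold value are assumptions made for the example.

```python
def spike(v, v_th=1.0):
    """Spike generation as a step function of the membrane potential."""
    return 1.0 if v >= v_th else 0.0

def central_difference(f, x, h):
    """Numerical estimate of df/dx at x with step h."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

# Away from the threshold the derivative is zero, so no gradient flows.
grad_below = central_difference(spike, 0.5, 1e-3)

# At the threshold the estimate equals 1 / (2h) and grows without bound
# as the step h shrinks.
grad_at_th_coarse = central_difference(spike, 1.0, 1e-3)
grad_at_th_fine = central_difference(spike, 1.0, 1e-6)
```

A gradient that is either zero or unbounded gives gradient descent nothing usable, which is why SNN training methods differentiate a different quantity instead.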

The backpropagation method has several advantages, such as high performance on data-driven tasks, low energy consumption, and a high degree of similarity with recurrent neural networks in terms of the training process. Despite the similarity with the well-established ANN backpropagation method, backpropagation for SNNs has several drawbacks. First of all, it cannot fully replicate the effectiveness of optimising a loss function, so there is still an accuracy gap between SNNs and ANNs that remains to be closed. Additionally, once neurons become inactive during training, their weights become frozen.

There are also alternative formulations of this algorithm, such as forward propagation through time (FPTT),[29] which is used for training recurrent SNNs. This algorithm avoids many of the drawbacks of conventional backpropagation by removing the dependence on the sum of partial gradients during the gradient calculation. Its most distinctive feature is that, along with the regular loss, it computes a dynamic regularisation penalty based on previously encountered loss values, which makes recurrent network training resemble feed-forward network training.

Local learning rules

In local learning, neurons are trained locally: spatially and temporally local signals serve as the input to a single neuron’s weight-update function. The motivation is that backpropagation deals only with finite sequences of data, which restricts the temporal dependencies that can be learned. Local learning algorithms also compute gradients, as backpropagation does, but through computations that make the gradient calculation temporally local. However, they demand significantly more computation than backpropagation, which currently prevents them from replacing conventional backpropagation despite their greater biological plausibility.

The constraint imposed on brain-inspired learning algorithms is that the calculation of a gradient should, like the forward pass, be temporally local, i.e. it should depend only on values available at the present time. To address this, learning algorithms turn to their online counterparts that adhere to temporal locality. Real-time recurrent learning (RTRL),[30] proposed back in 1989, is one prominent example.

2.5. Towards hardware implementations of SNN

To extend the understanding of how spiking neural networks work, in this section we review a schematic process of solving the exclusive OR (XOR) problem with an SNN, using the example presented in Ref. [31]. Since XOR is a fairly simple logic gate, it serves as a convenient demonstration of SNN training and operation. In fact, working with SNNs poses almost the same challenges as training ANNs: for example, which data representation to choose to describe the problem effectively, what structure the network should have, how to interpret the network output, and so on. That is, we should approach a given problem, including the XOR problem, in the same general way as with ANNs.

The training process for the chosen task can be separated into several steps:

- Neuron model

Due to the low complexity of this task, it is most efficient to use a simple neuron model as well. The LIF model suits this problem best because it is easy to control and does not introduce unwanted complexity.

- Data representation

Since we test the possibility of creating a network of spiking neurons to perform logic gate operations, the input data can be simply labeled in two inputs. Each input has to carry its own representative frequency in order to be separable for the network. Taking these statements into account, we can (for example) define the frequency input values as 25 Hz for the Boolean Zero (“0”) and 51 Hz for the Boolean One (“1”). These values are chosen as they are decisively different from each other and can be clearly represented visually.

- Structure

The neural network structure represents the logic gate as follows: two input neurons that encode any combination of “0” and “1”, hidden layer neurons represent any possible combination of 0’s and 1’s, and output layer produces the predicted output, as shown in Figure 4.

- Receptive fields filter (RFF)

To strengthen the response of the corresponding neurons to their designated frequencies, an RFF is added to the connections of every hidden-layer neuron. The RFF helps neurons to detect particular frequencies by filtering out those outside their response range. The RFF formula[31] is

- Input encoding

The input frequencies are encoded into linear spike trains, i.e. the distance between action potentials, known as the inter-spike interval (ISI), is treated as constant. The network was designed to take advantage of the precise timing between action potentials. If the ISIs on input A are synchronised with the ISIs on input B, both inputs have the same frequency.

- Training

The use of the RFF is an effective and biologically plausible way to reduce complexity and fault vulnerability in this problem. Because of this, and because of the small number of neurons, manual fine-tuning of thresholds and weights is enough to train the network to produce accurate results and successfully recognise all possible XOR combinations. However, as the size of the network increases, using one of the network training algorithms becomes inevitable.
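The data representation and input encoding steps above can be sketched in a few lines. The example encodes each Boolean input as a constant-ISI spike train at 25 Hz or 51 Hz and recovers the XOR result by comparing the frequencies estimated from the ISIs. It deliberately replaces the hidden layer, the RFF and the LIF neurons of Ref. [31] with a direct frequency comparison, so it illustrates only the encoding and the target behaviour; all function names and the tolerance `tol_hz` are hypothetical.

```python
import numpy as np

# Frequency assigned to each Boolean value, as in the text.
FREQ_HZ = {0: 25.0, 1: 51.0}

def encode_bit(bit, duration_s=1.0):
    """Constant-ISI ("linear") spike train for one Boolean input."""
    freq = FREQ_HZ[bit]
    n_spikes = int(duration_s * freq)
    return np.arange(1, n_spikes + 1) / freq   # spike times in seconds

def decode_frequency(spike_times):
    """Estimate the input frequency from the mean inter-spike interval."""
    return 1.0 / np.mean(np.diff(spike_times))

def xor_from_trains(train_a, train_b, tol_hz=1.0):
    """XOR is 1 exactly when the two inputs carry different frequencies."""
    diff = abs(decode_frequency(train_a) - decode_frequency(train_b))
    return int(diff > tol_hz)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_from_trains(encode_bit(a), encode_bit(b)))
```

In the actual network the comparison is carried out by frequency-selective hidden neurons rather than by an explicit subtraction.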

To conclude this example, it is important to highlight that one should pay attention to the training workflow and investigate the problem deeply in order to recognise all the obstacles that may be encountered during training (e.g. in the XOR problem, proper interpretation of the signal frequency would not be possible without the RFF). Within the training workflow, the most important parts are the neuron model and the input/output coding, since they determine crucial aspects of how the problem is interpreted from the point of view of the network implementation. Conversely, training algorithms for weight optimisation play a lesser role in this process, because they mainly affect the computational complexity, and their influence on the accuracy of the results becomes noticeable only if the task requires a state-of-the-art network or results close to it.

3. CMOS-based bio-inspired neuromorphic circuits

The last decades of electronics and electrical engineering are clearly associated with the development of the conventional complementary metal-oxide-semiconductor (CMOS) technology.[32, 33] Semiconductors are well established in many devices that we use 7 days a week, all year round. It is therefore not surprising to see variations in the hardware implementation of SNNs based on semiconductor elements. Globally, all CMOS SNN implementation options can be divided into two parts, with the focus on either transistors or memristors. Examples of semiconductor implementations of neuron circuits are shown in Figure 5. Two CMOS solutions for implementing the functions of the single neuron model developed by IBM (for TrueNorth) and Stanford University (for Neurogrid) are presented here. In both cases, the realisation of the functions of even a single neuron (more precisely, the neuron soma) requires the involvement of a large number of elements.

3.1. Silicon-based neuron: operation

As might be expected, the realisation of spike sequence generation in CMOS technology differs from the way it is done in software models. Since semiconductor circuits operate with voltage levels, generating a spike as a nonlinear voltage response requires some tricks. To better understand how SNNs work at the hardware level and how spike formation occurs, let us briefly review the operation of the basic elements of such networks, which are isomorphic to ion-gated channels.[32, 36]

Realisation of ion-gated mechanisms

The goal of creating semiconductor models of neuromorphic spiking neural networks is to reproduce the biochemical dynamics of ionic processes in living cells. The first step is to reproduce the ion-gated channels responsible for the different voltage response patterns. The HH neuron model discussed above is essentially a thermodynamic model of ion channels. The channel model consists of a number of independent gating particles that can adopt two states (open or closed) which determine the permeability of the channel. The HH variable represents the probability that a particle is in the open state or, in population terms, the fraction of particles in the open state.

In a steady state, the total number of opening particles (the opening flux, which depends on the number of closed channels and the opening rate) is balanced by the total number of closing particles (the closing flux). A change in the membrane voltage (or potential) causes an increase in the rate of one of the transitions, which in turn causes an increase in the corresponding flux of particles, thereby modifying the overall state of the system. The system will then reach a new steady state with a new ratio of open to closed channels, thus ensuring that the fluxes are once again in equilibrium.
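This flux balance can be written compactly in the usual two-state notation. The symbols are assumptions introduced here: n is the fraction of gating particles in the open state, and α(V) and β(V) are the voltage-dependent opening and closing rates.

```latex
\frac{dn}{dt}
  = \underbrace{\alpha(V)\,(1 - n)}_{\text{opening flux}}
  - \underbrace{\beta(V)\,n}_{\text{closing flux}},
\qquad
n_\infty(V) = \frac{\alpha(V)}{\alpha(V) + \beta(V)},
\qquad
\tau_n(V) = \frac{1}{\alpha(V) + \beta(V)} .
```

Setting dn/dt = 0 gives the steady-state fraction n_∞. After a step change in V, the solution n(t) = n_∞ + (n(0) − n_∞) exp(−t/τ_n) relaxes exponentially to the new steady state.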

This situation becomes even clearer if we consider it from the perspective of energy balance. Indeed, a change in the voltage on the membrane is equivalent to a change in the electric field across it. Then the equilibrium state of the system depends on the energy difference between the particles in the different states: if this difference is zero and the transition rates are the same, the particles are equally distributed between the two states. Otherwise, the state with lower energy will be preferred and the system will tend to move to it. Note that in the HH model, the change in the population of energy states is exponential in time. The situation is similar for the density of charge carriers at the source and drain of the transistor channel, which also depends exponentially on the height of the energy barriers. These energy barriers exist due to the inherent potential difference (electric field) between the channel and the source or drain. Varying the source or drain voltage changes the energy level of the charge carriers.

The similarity of the physics underlying the operation of neuronal ion channels and transistors allows us to use transistors as thermodynamic imitators of ion channels. In both cases, there is a direct relationship between the energy barrier and the control voltage. In an ion channel, isolated charges must overcome the electric field generated by the voltage across the membrane. For a transistor, electrons or holes must overcome the electric field created by the voltage difference between the source, or drain, and the transistor channel. In both of these cases, the charge transfer across the energy barrier is governed by the Boltzmann distribution, resulting in an exponential voltage dependence.[37] To model the closing and opening fluxes, we need to use at least two transistors, the difference of signals from which must be integrated to model the state of the system as a whole. A capacitor (

Integrate-and-fire neuron realisation

Now we are ready to understand the membrane voltage and spike formation. These processes are explained using a model of an integrate-and-fire neuron realised using MOSFETs (

The presented neuron circuit operation begins with the flow of synaptic current pulses (step 1) from presynaptic devices into the neuron circuit. Charges carried by the input current are integrated into

Figure 7b illustrates the manner in which the membrane current changes with the membrane voltage during the charging and discharging of

Izhikevich neuron model with silicon-based realisation

In 2008 Wijekoon and Dudek[39] proposed a circuit that implements a cortical neuron (Figure 8), inspired by the mathematical neuron model proposed by Izhikevich in 2003. The circuit contains 14 MOSFETs, which form three blocks: membrane potential circuitry (transistors

The slow variable circuit works as follows. The magnitude of the current supplied by

In the comparator circuit, the voltage

The basic circuit comprises 202 neurons with different circuit parameters, including transistor and capacitance sizes. Fabrication was conducted using 0.35 µm CMOS technology. Since the transistors in this circuit operate mainly in strong inversion mode, the excitation patterns are on an “accelerated” time scale, about 10⁴ times faster than biological real time. The energy consumption of the circuit is less than 10 pJ per spike.[32, 39] The main types of characteristic firing patterns of the proposed circuit are demonstrated in Figure 9.

3.2. Transistor-based realisations

This section reviews such significant projects as SpiNNaker, Neurogrid, TrueNorth, NorthPole and Loihi. Although projects such as SpiNNaker[40] (2012) and Neurogrid[35] (2014) already existed when IBM’s chip was created, we start with the TrueNorth neuromorphic processor, since in our opinion it was the first hardware implementation of the idea of neuromorphic computing.

TrueNorth

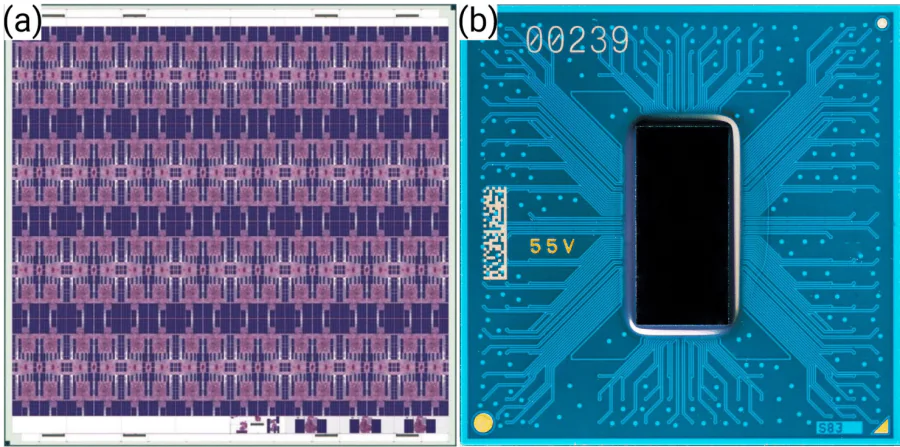

The field of brain-inspired technologies was marked in 2015 by the release of the first neuromorphic chip: IBM TrueNorth (Figure 10a), a neuromorphic CMOS integrated circuit. The TrueNorth chip architecture is based on an organic neurobiological structure, but with the limitations of inorganic silicon technology. The main purpose of this platform was to reproduce the work of existing neural network algorithms of speech and image recognition in real time with minimal energy consumption.[34] This neuromorphic chip contains 4096 neurosynaptic cores combined into a two-dimensional array, with a total of 1 million neurons and 256 million synapses. The chip has a peak computing performance of 58 GSOPS and an energy efficiency of 400 GSOPS/W (here GSOPS means giga-synaptic operations per second).

The use of the term “neuromorphic” itself implies that the chip is based on an architecture that differs from the familiar von Neumann architecture. Unlike von Neumann machines, the TrueNorth chip does not use sequential programs that map instructions into linear memory. The chip consists of spiking neurons, which are connected in a network and communicate with each other via spikes (voltage pulses). Communication between neurons is tunable, and the data transmitted can be encoded by the frequency, temporal and spatial distribution of the spikes. TrueNorth is designed for a specific set of tasks: sensory processing, machine learning and cognitive computing. However, just as in the early days of the computer age and the emergence of the first computer chips, the challenges of creating efficient neurosynaptic systems and optimising them in terms of programming models, algorithms and architectural features are still being solved. Currently, the IBM TrueNorth chip is being used by DARPA (Defense Advanced Research Projects Agency) for gesture and speech recognition.

An IBM Research blog post on TrueNorth’s performance notes that the classification accuracy demonstrated by the system approaches that of state-of-the-art implementations as of 2016, not only for image recognition but also for speech recognition. Ref. [41] reports performance data for five digital computing architectures running deep neural networks. A single TrueNorth chip processes 1200-2600 32×32 colour images per second while consuming 170-275 mW, yielding an energy efficiency of 6100-7350 FPS/W (here FPS means frames per second). Multi-chip TrueNorth implementations on a single board process 430-1330 32×32 colour images per second and consume 0.89-1.5 W, yielding an energy efficiency of 360-1420 FPS/W. SpiNNaker delivers 167 FPS/W while processing 28×28 grayscale images (in a configuration of 48 chips on one board).[42] The Tegra K1 graphics processing unit (GPU), the Titan X GPU and the Core i7 CPU deliver 45, 14.2 and 3.9 FPS/W, respectively, while processing 224×224 colour images.[41, 43]

SpiNNaker

The SpiNNaker (Spiking Neural Network Architecture) project, the brainchild of the University of Manchester, was unveiled in January 2012. It is a real-time microprocessor-based system optimised for the simulation of neural networks, and in particular spiking neural networks. Its main purpose is to improve the performance of software simulations.[40, 44] SpiNNaker uses a custom chip based on ARM cores that integrates 18 microprocessors in 102 mm² using a 130 nm process. The all-digital architecture uses an asynchronous message-passing network (2D torus) for inter-chip communication, allowing the whole system to scale almost infinitely. In experiments[42, 45, 46] a 48-chip board (see Figure 10d) was used, which can simulate hundreds of thousands of neurons and tens of millions of synapses, and consumes about 27-37 W in real time (for different neuron models and network configurations). On average, 2000 spikes formed into a Poisson train were used to encode a digit character from the MNIST dataset, with a classification latency of 20 ms.

In summary, SpiNNaker is a high-performance, application-specific architecture optimised for tasks from neurobiology and neuroscience in general. It is claimed that the system can also be used for other distributed computing applications such as ray tracing and protein folding.[45] The experimental studies performed suggest that for parallel modelling of deep neural networks, a dedicated multi-core architecture can indeed be energy efficient (compared to competitors and general purpose systems) while maintaining the flexibility of software-implementable models of neurons and synapses.

In 2021, SpiNNaker2, the second generation of the neuromorphic chip, was released by a collaboration between Technische Universität Dresden and the University of Manchester. Its development was conducted within the European Union flagship project “Human Brain Project”. The overall approach taken in creating SpiNNaker2 comprises the following principles: keep the processor-based flexibility of SpiNNaker1, do not do everything in software on the processors, use the latest technologies and features for energy efficiency, and allow workload adaptivity on all levels.[44] One SpiNNaker2 chip consists of 152 ARM (advanced RISC machine) processors (processing elements), arranged in groups of four as quad-processing-elements, which are connected by a Network-on-Chip (NoC) to allow scaling towards a large neuromorphic System-on-Chip (SoC). The full-scale SpiNNaker2 will consist of 10 million ARM cores distributed across 70000 chips in 10 server racks. The second generation is also built on a different process technology: SpiNNaker1 was realised in 130 nm CMOS, while SpiNNaker2 is realised in 22 nm fully depleted silicon-on-insulator (FDSOI) CMOS,[47] which not only increases the performance of a single chip as a whole but also improves power efficiency. It is stated that SpiNNaker2 enables a 10× increase in neural simulation capacity per watt over SpiNNaker1. Among the potential applications of SpiNNaker2, the following stand out: brain research and whole-brain modelling, biological neural simulations with complex plasticity rules (for example, spike-timing-dependent plasticity (STDP)), low-power inference for robotics and embedded artificial intelligence (AI), large-scale execution of hybrid AI models, autonomous vehicles, and other real-time machine learning applications.

Neurogrid

The Neurogrid project, carried out at Stanford University in December 2013,[48] is a mixed analogue-digital neuromorphic system based on a 180 nm CMOS process. The project has two main components: software for interactive visualisation and hardware for real-time simulation. The main application of Neurogrid is the real-time simulation of large-scale neural models to realise the function of biological neural systems by emulating their structure.[35]

The hardware part of Neurogrid contains 16 Neurocores connected in a binary tree (each a microelectronic chip with a 12 × 14 mm² die containing 23 million transistors and 180 pads), a Cypress Semiconductor EZ-USB FX2LP chip that handles USB communication with the host, and a Lattice ispMACH CPLD. The CPLD makes it possible to establish a link between the data transmitted via USB and the data driven by Neurogrid, and to interleave timestamps with the outgoing data (host binding). A daughter board is responsible for primary axonal branching and is implemented using a field-programmable gate array (FPGA). The Neurogrid board is shown in Figure 10c. Each Neurocore implements a 256 × 256 silicon neuron array, and also contains a transmitter, a receiver, a router, and two RAMs. A neuron has one soma, one dendrite, four gating variables and four synapse populations for shared synaptic and dendritic circuits.[35]

Neurogrid’s architecture makes it possible to simulate cortical models by emulating axonal arbors and dendritic trees: a cortical area is modelled by a group of the Neurocores mentioned above, and an off-chip random-access memory is programmed to replicate the neocortex’s function-specific intercolumn connectivity.[49] It can therefore simulate the behaviour of large neural structures, including conductance-based synapses, active membrane conductances, multiple dendritic compartments, spike backpropagation, and cortical cell types. No data are available on power consumption for image recognition, as there are for TrueNorth or SpiNNaker, but this is understandable: the main purpose of the project is to model the neural activity of living tissue, and that is what it does. What can be said for certain is that Neurogrid consumes less energy than a GPU for the same simulation: 120 pJ versus 210 nJ per synaptic activation.[50] This supports the thesis that digital simulation (on CPUs or GPUs), although it can solve such problems, requires significantly more time and power than specialised architectures.
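The size of this gap is easy to check with a few lines of arithmetic. The sketch below uses only the two per-activation energies quoted above; the workload of one billion synaptic activations is a hypothetical figure chosen for illustration.

```python
# Energy per synaptic activation quoted in the text (joules)
E_NEUROGRID = 120e-12   # 120 pJ
E_GPU = 210e-9          # 210 nJ

ratio = E_GPU / E_NEUROGRID

# Hypothetical workload: one billion synaptic activations
n_events = 1_000_000_000
energy_neurogrid = n_events * E_NEUROGRID   # joules
energy_gpu = n_events * E_GPU               # joules

print(f"GPU / Neurogrid energy ratio: {ratio:.0f}x")
print(f"Neurogrid: {energy_neurogrid:.2f} J, GPU: {energy_gpu:.0f} J")
```

The ratio is about 1750, i.e. more than three orders of magnitude per synaptic activation.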

NorthPole

A striking example of a hardware implementation of a semiconductor bio-inspired neural network is the recently (2023) introduced NorthPole chip from IBM[51] (Figure 10b). NorthPole is an extension of TrueNorth, and it’s not surprising that it inherits some of the technology used there. The NorthPole architecture is designed for low-precision, common-sense computing while achieving state-of-the-art inference accuracy for neural networks. It is optimised for 8-, 4- and 2-bit precision, eliminating the need for high precision during training. The NorthPole system consists of a distributed modular array of cores, with each core capable of massive parallelism, performing 8192 2-bit operations per cycle. Memory is distributed between and within the cores, placing it in close proximity to the computation. This proximity allows each core to take advantage of data locality, resulting in improved energy efficiency. NorthPole also incorporates a large on-chip memory area that is neither centralised nor hierarchically organised, further enhancing its efficiency.

Potentially, the NorthPole chip opens up new ways for the development of intelligent data processing for tasks such as optimisation (of systems, algorithms, scalability, etc.), image processing for digital machine vision, and data recognition for autopilots, medical applications, etc. The chip was also run on well-known benchmarks such as the ResNet-50 image recognition and YOLOv4 object detection models, where it showed outstanding results: higher energy and space efficiency and lower latency than any other chip available on the market today. It is roughly 4000 times faster than TrueNorth (since the requirements on computational precision in the chip are reduced, the specific performance cannot be estimated correctly). Ref. [51] provides the following data: NorthPole, based on a 12 nm process node, delivers 5 times more frames per joule than the NVIDIA H100 GPU based on a 4 nm process (571 vs. 116 frames/J) and 1.5 times more than the Qualcomm Cloud AI 100, which is specialised for neural network use and based on a 7 nm process. The reason for this is the locality of the computation: by eliminating off-chip memory and intertwining on-chip memory with compute, the locality of spatial computation is ensured and, as a result, energy efficiency is increased. Low-precision operations further increase NorthPole’s lead over its competitors.

Modha et al. [51] tested NorthPole only for use in computer vision. However, with this sort of potential, the chip can also be used for image segmentation and video classification. According to IBM’s blog, it was also tested in other areas, such as natural language processing (on the encoder-only BERT model) and speech recognition (on the DeepSpeech2 model).

Loihi and Loihi2

In 2018, Intel Labs unveiled the first neuromorphic manycore processor that enables on-chip learning and aims to model spiking neural networks in silicon. The name of this processor is Loihi (Figure 11a). Technologically, Loihi is a 60 mm² chip manufactured in Intel’s FinFET 14 nm process. The chip instantiates a total of 2.07 billion transistors and 33 MB of static random access memory (SRAM) across its 128 neuromorphic cores and three x86 cores, which manage the neuromorphic cores and control spike traffic in and out of the chip. It supports asynchronous spiking neural network models for up to 130000 synthetic compartmental neurons and 130 million synapses. Loihi’s architecture is designed to enable the mapping of deep convolutional networks optimised for vision and audio recognition tasks. Loihi was the first of its kind to feature on-chip learning via a microcode-based learning rule engine within each neural core, with fully programmable learning rules based on spike timing. Intel’s chip allows the SNN to incorporate: 1) stochastic noise, 2) configurable and adaptable synaptic, axonal and refractory delays, 3) configurable dendritic tree processing, 4) neuron threshold adaptation to support intrinsic excitability homeostasis, and 5) scaling and saturation of synaptic weights to support “permanence” levels beyond the range of weights used during inference.[52, 53]

The on-chip learning is organised in such a way that the minimum of the loss function over a set of training samples is achieved during the training process. The learning rules also satisfy the locality constraint: each weight can only be accessed and modified by the destination neuron, and the rule can only use locally available information, such as the spike trains from the pre-synaptic (source) and post-synaptic (destination) neurons. Loihi was the first fully integrated digital SNN chip to support diverse local information for a programmable synaptic learning process, such as: 1) spike traces corresponding to filtered presynaptic and postsynaptic spike trains with configurable time constants, 2) multiple spike traces for a given spike train filtered with different time constants, 3) two additional state variables per synapse, besides the normal weight, to provide more flexibility for learning, and 4) reward traces that correspond to special reward spikes carrying signed impulse values to represent reward or punishment signals for reinforcement learning.[52]
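To make the notion of a local, trace-based rule concrete, the following Python sketch implements generic pair-based STDP with exponentially filtered spike traces. It is not Loihi's microcode or its programming interface: the function name `run_trace_stdp`, the time constants and the amplitudes `a_plus` and `a_minus` are hypothetical values chosen for illustration. The rule touches only the weight, the presynaptic trace and the postsynaptic trace, which is the locality property described above.

```python
import math

def run_trace_stdp(pre_spikes, post_spikes, steps, w=0.5,
                   tau_pre=20.0, tau_post=20.0, a_plus=0.01, a_minus=0.012):
    """Pair-based STDP driven by two local spike traces (time step = 1)."""
    pre_set, post_set = set(pre_spikes), set(post_spikes)
    x_pre = x_post = 0.0
    decay_pre = math.exp(-1.0 / tau_pre)
    decay_post = math.exp(-1.0 / tau_post)
    for t in range(steps):
        # Traces are low-pass filtered versions of the spike trains
        x_pre *= decay_pre
        x_post *= decay_post
        if t in pre_set:
            x_pre += 1.0
            w -= a_minus * x_post   # pre arrives after post: depression
        if t in post_set:
            x_post += 1.0
            w += a_plus * x_pre     # post arrives after pre: potentiation
        w = min(1.0, max(0.0, w))   # keep the weight in a bounded range
    return w

# Causal pairing (pre before post) strengthens the synapse,
# anti-causal pairing (post before pre) weakens it.
w_causal = run_trace_stdp(pre_spikes=[10], post_spikes=[15], steps=50)
w_anticausal = run_trace_stdp(pre_spikes=[15], post_spikes=[10], steps=50)
print(w_causal, w_anticausal)
```

Using several traces with different time constants, or adding a reward trace that gates the update, would extend the same loop without breaking locality.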

In the original Intel Labs work, the performance of Loihi was evaluated on the least absolute shrinkage and selection operator (LASSO) task (an ℓ1-minimising sparse coding problem). The goal of this task is to determine the sparse set of coefficients that best represents a given input signal as a linear combination of features from a feature dictionary.[52] The task was solved on a 52 × 52 image with a dictionary of 224 atoms, and Loihi provided an 18-fold compression of synaptic resources for this network. The sparse coding problem was solved to within 1% of the optimal solution. Unfortunately, the article does not provide data on the energy efficiency and speed of the chip when solving this problem. Somewhat later, in Ref. [53], the classical classification task on the modified NIST (MNIST) dataset was solved with an accuracy of 96.4%.
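For reference, the sparse coding task described above is commonly written as the following optimisation problem. The notation is assumed here for illustration and is not necessarily that of Ref. [52]: $x$ is the input signal, $D$ is the dictionary whose columns are the atoms, $a$ is the coefficient vector, and $\lambda > 0$ is a regularisation weight that controls sparsity.

```latex
\min_{a}\; \frac{1}{2}\,\lVert x - D a \rVert_2^2 \;+\; \lambda\,\lVert a \rVert_1
```

The first term penalises the reconstruction error, while the ℓ1 term drives most coefficients to zero, so that only a few atoms are used to represent the input.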

In 2021 Intel Labs introduced their second-generation neuromorphic research chip Loihi 2 (Figure 11b), as well as the open-source software framework LAVA for developing neuro-inspired applications. Based on the Loihi experience, Loihi 2 supports new classes of neuro-inspired algorithms and applications, while providing up to 10 times faster processing, up to 15 times greater resource density with up to 1 million neurons per chip, and improved energy efficiency.[54] Loihi 2 has the same base architecture as its predecessor Loihi, but with new functionality and improvements. For example, the new chip supports fully programmable neuron models with graded spikes (Loihi supported only a generalised LIF neuron model with binary spikes, whereas Loihi 2 supports any model) and achieves a 2× higher synaptic density. Refs. [55, 56] report a comparison of the performance and energy efficiency of the Loihi 2 chip with those of the NVIDIA Jetson Orin Nano on video and audio processing tasks. Neuromorphic computing systems based on Loihi 2 provide significant gains in energy efficiency, latency, and even throughput for intelligent signal processing applications (such as navigation and autopilot systems, and voice recognition systems) compared to conventional architectures. For example, the Loihi 2 implementation of the Intel NSSDNet (Nonlocal Spectral Similarity-induced Decomposition Network) increases its power advantage to 74× compared to NsNet2 (Noise Suppression Net 2) running on the Jetson Orin Nano platform. Loihi 2 has also demonstrated its advantages in the implementation of the Locally Competitive Algorithm.[56] Loihi 2 is also capable of reproducing bio-realistic neural network implementations, and it is flexible in terms of supporting different neuron models. Ref. [57] demonstrates the implementation of a simplified bio-realistic basal ganglia neural network that performs a “Go/No-Go” task using Izhikevich neurons.

3.3 Memristor-based realisations

A memristor is the fourth passive element of electrical circuits. It is a two-terminal device whose main property is the ability to memorise its state depending on the applied bias current. Theoretically proposed[58] by Leon Chua in 1971, the memristor (memory + resistor) first saw the light of day as a prototype based on a thin film of titanium dioxide in 2008, thanks to HP Labs.[59] The reason for the development of this device in the first place was the following problem: to further improve the efficiency of computing, electronic devices must be scalable to reduce manufacturing costs, increase speed and reduce power consumption; that is, more and more transistors must be placed on the same chip area with each generation. However, due to physical limitations and rising manufacturing costs when moving to new process standards (to a 10 nm process, for example), the processing nodes of a traditional CMOS transistor can no longer scale cost-effectively and sustainably. As a result, new electronic devices with higher performance and energy efficiency have become necessary to satisfy the needs of the ever-growing information technology market,[60] implementing new “non-von Neumann” paradigms of in-memory computation.[61, 62]

Initially, memristive systems acted as elements of energy-efficient resistive random-access memory (RRAM) by using two metastable states with high and low resistance, switching between which is carried out by applying an external voltage. In recent years, however, memristors have been shown to be capable of realising the functions of both synapses and neurons in both ANNs and SNNs.[63] Figure 12 shows one of the realisations of a memristor-based neuron. It exploits the diffusion processes between two types of layers: a dielectric SiOxNy:Ag layer (doped with Ag nanoclusters) and a Pt layer.[64] The SiOxNy:Ag material serves as the functional part of the memristor, allowing the creation of a model of the leaky integrate-and-fire neuron. The diffusive memristor integrates the presynaptic signals (arriving at one of the Pt electrodes) within a time window and transitions to a low-resistance state only when a threshold is reached.
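The integrate-within-a-window-then-fire behaviour described above is that of an abstract leaky integrate-and-fire (LIF) unit, which can be sketched in a few lines of Python. This is a behavioural toy model, not a physical model of the diffusive memristor: the function name `lif_spike_times` and all parameter values (time constant, threshold, reset level) are assumptions made for illustration.

```python
def lif_spike_times(input_current, dt=1.0, tau=10.0, threshold=1.0, v_reset=0.0):
    """Return the time-step indices at which a simple LIF unit fires."""
    v = 0.0
    spikes = []
    for step, i_in in enumerate(input_current):
        # Leak pulls the state back to rest; input pushes it up
        v += dt * (-v / tau + i_in)
        if v >= threshold:
            spikes.append(step)   # threshold reached: fire
            v = v_reset           # and return to the resting state
    return spikes

# Weak input leaks away faster than it accumulates: no firing
print(lif_spike_times([0.05] * 100))
# Stronger input crosses the threshold periodically
print(lif_spike_times([0.2] * 30))
```

In the device, the role of the leak time constant is played by the characteristic relaxation time of the memristor, and the "reset" corresponds to its spontaneous return to the high-resistance state.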

Using nanotechnology capabilities, it is possible to miniaturise memristive electronic devices to units of nanometers, which allows achieving a high density of elements on a chip. An important advantage of memristors is their compatibility with CMOS technology: memristors can be organised fairly easily into a crossbar architecture that takes advantage of parallel in-memory computing. Because memristors are nanoscale, two-terminal semiconductor devices, memristor crossbars are characterised by high element density and better energy efficiency than their transistor counterparts. This makes it possible to implement memristive networks based on 2D- and 3D-integrated crossbar structures to increase the speed of signal transmission.[65-70] This is why memristor crossbars are seen as a good candidate basis for neuromorphic networks.[71]

It is assumed that, owing to the similar mechanisms of ion exchange in biological nerve cells and memristors, the latter can mimic synapses and even neurons with sufficiently high accuracy. Since the capabilities of a neural network are usually determined by its size (number of neurons and inter-neuron connections), a scalable and energy-efficient component base is required to create a more powerful and energy-efficient system.[72, 73] Many works have studied the properties of memristors in terms of their application as synaptic elements and neurons, and there have even been successful demonstrations of synaptic plasticity[74] and of the operation of the integrate-and-fire neural model.[75] An artificial neuron based on threshold switching and fabricated from NbOx material has also been demonstrated. Such a neuron displays four critical features: threshold-driven spiking, spatiotemporal integration, dynamic logic and gain modulation.[76]

The dynamic characteristics of memristors in combination with their nonlinear resistance allow us to observe the responses of the system to external stimulation.[77] At the same time, the stochastic properties of memristors due to interaction with the external environment[78] can be used both for controlling metastable states and for the hardware design of SNN circuits.[79] The first experimental prototypes of SNNs based on memristors and CMOS have already been created (synapses are realised via memristor crossbars, and neurons via semiconductor transistors), and they can “to a certain extent” emulate spike-timing dependent plasticity.[80, 81] However, they are based on a simplified concept of synaptic plasticity based on overlapping pre- and postsynaptic spikes,[81] which has led to reduced energy efficiency and a rather complex technological design of SNN circuits. Approaches to overcome these problems are currently being developed, for example, by completely abandoning analogue-digital and digital-analogue conversions and creating neuromorphic systems in which all signal processing occurs in analogue form,[82] or by creating the concept of self-learning memristor SNNs.

Unfortunately, realising a fully memristive neuromorphic neural network requires non-linear dynamic signal processing. This challenge has so far not been overcome by memristors alone, and every memristive neural network proposal relies on the operation of classical semiconductor transistors in one way or another. Below we consider two interesting realisations of CMOS memristive neural networks.

Neuromorphic network based on diffusive memristors

In 2018 Wang et al. presented an artificial neural network implemented on diffusive memristors (and also on transistors) that is capable of solving a pattern classification task with unsupervised learning.[64] Note that the network demonstrated in the paper recognised only four letters (“U”, “M”, “A”, “S”), presented as 4-by-4 pixel images. Nevertheless, a working memristive network is a significant achievement and shows the feasibility in principle of the idea of memristor-based neuromorphic hardware computing. Figure 12 shows the central part of this network, the artificial neuron: a diffusive memristor consisting of a SiOxNy:Ag layer between two Pt electrodes and serving as the neuron’s soma.

The most important difference between a diffusive memristor and a traditional memristor is that once the voltage is removed from the device terminals, it automatically returns to its original high-resistance state. The dynamics of the diffusion process in a diffusive memristor is physically similar to biological Ca²⁺ dynamics, which makes it possible to mimic different temporal synaptic and neuronal properties accurately.[72]

The diffusive memristor in the artificial neuron is very different from non-volatile drift memristors or phase-change memory devices used as long-term resistive memory elements or synapses. The point is that the memristor processes the incoming signals within a certain time window (a characteristic time, which in the diffusive memristor model is determined by the Ag diffusion dynamics needed to dissolve the nanoparticle bridge and return the neuron to its resting state) and then, only when a threshold has been reached, transitions to a low-resistance state. Figure 13a shows an integrated chip of the memristive neural network,[64] consisting of a one-transistor–one-memristor (Figure 13b,c) synaptic 8-by-8 array and eight diffusive memristor neurons (Figure 13d,e). The synapses were created by combining drift memristors with arrays of transistors. In this configuration, each memristor (i.e., the Pd/HfO2/Ta structure) is connected in series with an n-type enhancement-mode transistor. When all the transistors are in an active state, the array functions as a fully connected memristor crossbar.

The input images are divided into four 2-by-2 pixel subsets, where each pixel is assigned a specific pair of voltages (equal in modulus but different in sign), depending on the colour and intensity of the pixel. Each resulting subset is expanded into a single-column input vector comprising eight voltages. This input vector is then applied to the network, which consists of eight rows, at each time step. For each possible subset, there is a corresponding convolution filter implemented using eight memristor synapses per column. In total, there are eight filters in an 8 × 8 array. The convolution of the eight filters with each sub-image simultaneously results in the “firing” of the corresponding neurons, which fulfil the role of ReLU. The eight outputs of these “ReLUs” are coupled to an 8-by-3 fully connected memristive crossbar, whose fan-out concentrates all spikes on the last layer of the network, consisting of three neurons; the “firing” of one of them corresponds to a particular recognised image. It is claimed that the neuromorphic nature of the presented network is ensured by the STDP effect used in the learning process and reflected in the change in the resistance of the memristors in the crossbar when the next voltage pulse is passed. Despite the insufficient (in our opinion) representativeness of the learning results, the network architecture and the physics behind the devices used leave no doubt about the presence of the STDP effect, considering that similar effects were observed in memristors back in 2015.[83]
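The data path described above (differential voltage encoding, a crossbar acting as a bank of filters, and thresholding neurons) can be sketched in Python as follows. The function names, the linear mapping from pixel intensity to voltage and the numerical conductances are hypothetical and serve only to illustrate the principle; they are not taken from Ref. [64]. The crossbar computes each column current as the sum of row voltages multiplied by the conductances in that column (Ohm's and Kirchhoff's laws), and the pair of opposite-sign voltages per pixel lets two positive conductances represent one signed weight.

```python
def encode_patch(patch, v_max=1.0):
    """Map each pixel intensity in [0, 1] to a voltage pair (+v, -v)."""
    voltages = []
    for p in patch:
        v = v_max * p
        voltages.extend([v, -v])
    return voltages

def crossbar_currents(voltages, conductances):
    """Column currents I_j = sum_i V_i * G[i][j] of an ideal crossbar."""
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(n_cols)]

def relu(currents, threshold=0.0):
    """Thresholding neuron: responds only to current above the threshold."""
    return [max(0.0, i - threshold) for i in currents]

# One 2-by-2 sub-image flattened to four pixels -> eight row voltages
patch = [1.0, 0.0, 0.0, 1.0]
rows = encode_patch(patch)

# Toy 8 x 2 conductance matrix (two filters instead of eight, made-up values):
# column 0 is tuned to the diagonal pattern above, column 1 to its complement
G = [[3.0, 1.0], [1.0, 3.0],
     [1.0, 3.0], [3.0, 1.0],
     [1.0, 3.0], [3.0, 1.0],
     [3.0, 1.0], [1.0, 3.0]]

print(relu(crossbar_currents(rows, G)))
```

Only the column whose filter matches the input pattern yields a positive current and hence a response, which is the role played by the "firing" of the diffusive memristor neurons.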

STDP learning in partially memristive SNN

A further realisation of a memristor-based neural network was proposed by Prezioso et al. [80], who experimentally demonstrated operation and STDP learning in an SNN implemented with passively integrated (0T1R) memristive synapses connected to a silicon LIF neuron. The experimental setup consisted of 20 input neurons connected via 20 memristive crossbar-integrated synapses to a single LIF neuron. The input neurons (one for each row of the memristive crossbar) are implemented using off-the-shelf digital-to-analogue converter circuits. Synapses are implemented by Pt/Al2O3/TiO2−x/Pt based memristors in a 20 × 20 crossbar array. In this SNN implementation there is only a single LIF output neuron, which is connected to the third array column, while the other columns are grounded. The LIF neuron is also realised on the custom printed circuit board (see Figure 14). This arrangement allows connecting the crossbar lines either to the input/output neurons during network operation or to a switch matrix, which in turn is connected to the parameter analyser, for device forming, testing, and conductance tuning. Once the threshold is reached, the LIF neuron triggers an arbitrary waveform generator. The characteristic duration of the voltage spikes is on the order of 5 ms.

The neural network was trained to solve the coincidence detection problem (the task of identifying correlated spiking activity) using the STDP learning mechanism, which allows training a single neuron to produce an output pulse when it receives simultaneous (i.e., correlated) pulses from multiple input neurons. The relevance of such a task is due to the fact that the coincidence detection mechanism is an integral part of various parts of the nervous system, such as the auditory and visual cortex, and is generally believed to play a very important role in brain function.[84-86]

The experiment confirmed one of the main challenges for SNN implementation with memristors: their device-to-device variations. This imposes its own limitations on STDP learning, where it is important to consider certain time intervals (windows). Ideally, all synapses should be identical, and conductance updates for them should occur in equal STDP windows. In reality, however, conductance updates differ significantly between different memristive synapses, precisely because of differences in device switching thresholds. SNNs usually operate with spikes of the same magnitude, which allows parallel updating of weights in several devices in crossbar circuit designs. In this experiment, the magnitude of the spikes was chosen based on the average switching threshold of the devices. Therefore, the change of conductance in devices with a larger switching threshold was naturally smaller.
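The effect of threshold variation on a shared spike amplitude can be illustrated with a toy calculation. The model below is a deliberately crude assumption, not the device physics of Ref. [80]: the conductance change is taken to be proportional to the amount by which the applied voltage exceeds a device's switching threshold, and the applied voltage is set to a fixed multiple (`overdrive`, a hypothetical parameter) of the average threshold.

```python
def conductance_updates(thresholds, overdrive=1.2, rate=1.0):
    """Toy model: update is proportional to the excess over the threshold."""
    mean_threshold = sum(thresholds) / len(thresholds)
    v_applied = overdrive * mean_threshold   # same spike amplitude for all devices
    return [rate * max(0.0, v_applied - v_th) for v_th in thresholds]

# Three devices with low, average and high switching thresholds (made-up values)
thresholds = [0.8, 1.0, 1.2]
for v_th, dg in zip(thresholds, conductance_updates(thresholds)):
    print(f"threshold {v_th:.1f} V -> relative conductance change {dg:.2f}")
```

Even in this simplified picture, a single spike amplitude produces a strong update in the low-threshold device and almost none in the high-threshold one, so nominally identical synapses learn at different rates.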

Thus, CMOS models provide great integration capabilities, while at the same time requiring a high power supply and a large number of auxiliary elements to achieve biorealism. It is also still difficult for this technology to achieve high parallelism in the system due to significant interconnect losses. Meanwhile, important applications based on semiconductor neuromorphic chips are already being solved, and within the framework of the “Human Brain Project”, the second generation of the spiking neural network architecture SpiNNaker has even been created. Creating and advancing higher-performance and more energy-efficient semiconductor chips is a very important issue. There are broad areas where these devices may be in high demand, primarily because of the possibility of mobile deployment, from smart bioprostheses and brain-computer interfaces to “thinking” robot-androids.

Memristors can achieve bio-realism with fewer components (the ionic nature of their functioning is similar to that in neurons), but the technology of their manufacturing has not yet been refined and the problem of device-to-device variations has not been solved. Therefore, at the moment, we should assume that memristors will be an auxiliary tool for semiconductor technology. It is not realistic to create a fully memristive neuromorphic processor separately, but maybe it is not necessary?

At the device level, the energy required for computation and weight updates is minimal, as everything is rooted in the presence or absence of a voltage spike. At the architecture level, computation is performed directly at the point of information storage (neuromorphism and rejection of the von Neumann architecture), avoiding the data movement found in traditional digital computers. In addition, memristor networks can potentially process analogue information directly from various external sensors and sensing devices, which will further reduce processing time and power consumption. The superconductor element base used to build neuromorphic systems, discussed below, has the same capability. Experimental implementation of large memristor neural networks working with real data sets is at an early stage of development compared to CMOS analogues.[72] The device-to-device variation in memristors’ switching thresholds remains the major challenge.

4. Superconductor-based bio-inspired elements of neural networks

Superconducting digital circuits are also an attractive candidate for creating large-scale neuromorphic computing systems.[87-89] Their niche is in tasks that require both high performance and energy-efficient computing. Modern superconducting technologies make it possible to perform logical operations at high frequencies, up to 50 GHz, with energy consumption on the order of 10⁻¹⁹ to 10⁻²⁰ J per operation.[87, 90-94] Superconducting devices[95] lend themselves to the creation of highly distributed networks that offer greater parallelism than the conventional approaches mentioned above. For example, in a crossbar-based synaptic network, the resistive interconnection leads to performance degradation and self-heating. In contrast, the zero-dissipation superconducting interconnection at cryogenic temperatures provides a way to limit interconnection losses.

The main problem with superconductor-based technologies is their relatively low scalability. Currently, the size of superconducting logic elements, the main part of which is the Josephson junction (JJ),[96, 97] is about 0.2 µm² (approximately 10⁷ JJs per cm²).[98-100] This value is comparable to the size of a transistor in the 28 nm process technology in 2020. At the same time, superconducting hardware has a competitive advantage over CMOS technology due to its ability to exploit the third dimension in chip manufacturing processes. Incorporating vertically stacked Josephson inductors fabricated with self-shunted Josephson junctions in single-flux-quantum (SFQ) based circuits would increase circuit density with minimal impact on circuit margins.[101] In addition, the operating principles of Josephson digital circuits, which manipulate magnetic flux quanta with associated picosecond voltage pulses, are very close to the ideas of spiking neural networks.

Further development of the superconducting implementation of neuromorphic systems thus offers the prospect of creating a neural network that emulates the functioning of the brain with ultra-high performance. At the same time, the neurons themselves prove to be relatively compact, since only a few heterostructures are needed for the proper functioning of the cell.

The design of neurons and synapses using Josephson junctions and superconducting nanowires is discussed in the following subsections.

4.1. Implementations based on Josephson junctions

This approach is based on the quantisation of magnetic flux in superconducting circuits. Moreover, the flux quantum[102-104] can only enter and exit through a weak link in the superconductor: the Josephson junction, an analogue of an ion-permeable pore in the membrane of a biological neuron. This fact is important because studies of neural systems focus mainly on studying and reproducing the behaviour of neurons, including complex patterns of neuronal activity, their “firings”. Just as biological neurons have a threshold voltage above which an action potential is generated, a Josephson junction has a threshold current, the Josephson junction critical current $I_c$.

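One of the most common ways to describe Josephson junctions is the resistively shunted junction model, in which the junction is replaced by an equivalent circuit of three parallel elements: an ideal Josephson junction, a resistor and a capacitor.[93] The total current through the circuit can be written as the sum of three currents:

$$ I = I_c \sin\varphi + \frac{V}{R} + C\,\frac{dV}{dt}, $$

where $\varphi$ is the Josephson phase, $I_c$ is the critical current, $R$ is the shunt resistance, $C$ is the junction capacitance and $V$ is the voltage across the junction. Using the Josephson relation $V = \frac{\Phi_0}{2\pi}\frac{d\varphi}{dt}$, this becomes

$$ I = I_c \sin\varphi + \frac{\Phi_0}{2\pi R}\,\frac{d\varphi}{dt} + \frac{\Phi_0 C}{2\pi}\,\frac{d^2\varphi}{dt^2}, $$

where $\Phi_0 = h/2e$ is the magnetic flux quantum.

This equation is identical to that of a forced damped pendulum, where the first term gives the torque due to a “gravitational” potential, the second term is the damping term and the third term is the inertial (kinetic) term with a mass proportional to the capacitance $C$.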
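The threshold behaviour of the junction can be illustrated numerically. The Python sketch below integrates the resistively shunted junction model in its commonly used dimensionless form $\beta_c \ddot\varphi + \dot\varphi + \sin\varphi = i$, where $i$ is the bias current in units of the critical current, time is measured in units of $\Phi_0/(2\pi I_c R)$, and $\beta_c = 2\pi I_c R^2 C/\Phi_0$ is the Stewart-McCumber parameter. The function name `count_flux_quanta`, the value $\beta_c = 1$, the time step and the simple Euler-type integration scheme are assumptions made for illustration. Each $2\pi$ advance of the phase corresponds to one flux quantum passing through the junction, that is, to one voltage pulse.

```python
import math

def count_flux_quanta(i_bias, beta_c=1.0, t_end=200.0, dt=0.01):
    """Number of 2*pi phase slips of a junction under constant bias current."""
    phi, dphi = 0.0, 0.0   # phase and its time derivative (normalised voltage)
    for _ in range(int(t_end / dt)):
        # Pendulum-like equation of motion: drive - restoring torque - damping
        d2phi = (i_bias - math.sin(phi) - dphi) / beta_c
        dphi += d2phi * dt
        phi += dphi * dt
    return int(phi // (2.0 * math.pi))

# Below the critical current the phase settles and no pulses are emitted;
# above it the phase runs and the junction emits a train of pulses.
print("i = 0.5:", count_flux_quanta(0.5), "pulses")
print("i = 1.5:", count_flux_quanta(1.5), "pulses")
```

In the pendulum picture, a sub-critical bias only tilts the pendulum to a new equilibrium angle, whereas a super-critical bias makes it rotate continuously, each full turn being one "spike".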

The Josephson junction soma (Figure 15a), or JJ soma, proposed by Crotty et al. [106], is a circuit of two Josephson junctions connected in a loop which displays very similar dynamics to the HH model. The two junctions behave phenomenologically like the sodium and potassium channels, one allowing magnetic flux to charge up the loop and the other allowing flux to discharge from the loop. The circuit exhibits many features of biologically realistic neurons, including the evocation of action potentials “firing” in response to input stimuli, input strength thresholds below which no action potential is evoked, and refractory periods after “firing” during which it is difficult to initiate another action potential.

Refs. [105, 107, 108] discuss an improved version of such a soma, where the input “pore” is represented as an asymmetric interferometer (Figure 15b). The main advantage of the 3JJ neuron is that its mode of operation is easier to control. It has been shown that the 3JJ neuron has a much wider range of parameters in which switching between all operating modes (bursting, regular, dead, injury) is possible simply by controlling the bias current. Furthermore, the 3JJ neuron can be made controllable using identical Josephson junctions, and this design tolerates larger variations in the physical parameters of the circuit elements.

Each element of the nervous system can be represented by an element of classical rapid single-flux-quantum (RSFQ) logic with similar behaviour. The complete architecture and a comparison with the biological archetype are shown in Figure 16. This circuit operates in a clocked regime in order to maintain stability when unforeseen side effects occur. One of the most compelling capabilities of superconducting electronics is its ability to support very high clock rates.

1. Soma – Josephson Comparator (JC) This is a clocked decision element that decides to let a single flux quantum pass in response to a current driven into source 1 in Figure 16. The decision function of the comparator is similar to the activation of a neuron in response to a current stimulus. Note that the Josephson comparator has been widely used as a nonlinear element in superconducting spiking neural networks.[110, 111]

2. Axon – Josephson Transmission Line (JTL) The action potential originating from the soma can be transmitted to adjacent neurons by the Josephson Transmission Line (JTL). A magnetic flux quantum can move along the JTL with a short delay and low energy dissipation, and its passage through each Josephson junction is accompanied by a voltage spike across the heterostructure. This mechanism can be likened to the process observed in biological systems, where the myelin sheath covering axons enables the action potential to “hop” from one point to the next.

3. Synapse – Adaptive Josephson Transmission Line (AJTL) Synapses exert an inhibitory or excitatory influence on the postsynaptic neuron that depends on the past activity of the pre- and post-synaptic neurons. While memory is still difficult to implement in RSFQ technology, Feldhoff et al.[109] have designed a short-term adaptation element that sets a connection weight depending on the steady-state activity of the pre- and post-synaptic neurons. A common design element in RSFQ circuits is a release junction, which prevents congestion. Inserting a release junction into a JTL makes it controllable by two currents. As a result, the release probability of an SFQ pulse from the JTL-based superconducting quantum interference device (SQUID) can be controlled by the current flowing through the junction. When the pulse sequences of the pre- and post-synaptic neurons are driven through an L-R low-pass filter, the current through the output JJ becomes a function of the pulse frequency and thus of the activity level of both neurons. Thermal noise adds uncertainty to the junction switching and to the flux release from the superconducting loop. This creates a continuous dependence of the SFQ transmission probability on the junction current balance. Recently, Feldhoff et al. [112] have presented an improved version of the synapse in which the single junction is replaced by a two-junction SQUID, called a release SQUID. This allows the critical current to be controlled by coupling an external magnetic field into the release circuit. Since the flux can be stored in another SQUID loop, the critical current can be permanently adjusted, which changes the transmission probability of SFQs passing through the synapse. This makes the synaptic connection more transparent to the connected neurons. Tunable synaptic interconnections can also be realised using adjustable kinetic inductance.[113]
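The activity-dependent synapse described in item 3 can be illustrated with a toy model. The sketch below assumes a first-order L-R low-pass filter with time constant $\tau = L/R$ that converts a pulse train into a slowly varying control current, and it assumes an error-function (Gaussian noise) shape for the thermally smeared transmission probability. Both function names, the error-function form and all parameter values are hypothetical and are not taken from Refs. [109, 112].

```python
import math

def filtered_current(spike_times, t_end, tau=5.0, dt=0.01, kick=1.0):
    """Mean output of a first-order low-pass filter driven by unit pulses.

    Each pulse adds kick/tau to the current, which then decays with time
    constant tau; the value is averaged over the second half of the run.
    """
    spikes = sorted(spike_times)
    n_steps = int(round(t_end / dt))
    current, idx, total, count = 0.0, 0, 0.0, 0
    for k in range(n_steps):
        t = k * dt
        current -= current * dt / tau            # exponential leak
        while idx < len(spikes) and spikes[idx] <= t:
            current += kick / tau                # contribution of one pulse
            idx += 1
        if k >= n_steps // 2:
            total += current
            count += 1
    return total / count

def transmission_probability(i_control, i_threshold=0.5, noise_width=0.1):
    """Assumed sigmoidal (error-function) dependence on the control current."""
    x = (i_control - i_threshold) / (math.sqrt(2.0) * noise_width)
    return 0.5 * (1.0 + math.erf(x))

# A more active neuron produces a larger filtered current and, under the
# sign convention assumed here, a higher SFQ transmission probability.
i_fast = filtered_current([k * 1.0 for k in range(1, 200)], t_end=200.0)
i_slow = filtered_current([k * 5.0 for k in range(1, 40)], t_end=200.0)
p_fast = transmission_probability(i_fast)
p_slow = transmission_probability(i_slow)
```

In steady state the filtered current in this model is proportional to the pulse rate, which is the sense in which the junction current encodes neuronal activity.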

To enable parallel processing in a network, the technology platform must provide sufficient capabilities for both input (fan-in) and output (fan-out). To address the issue of limited fan-in in JJ-based neuron designs, Karamuftuoglu et al. [114] introduced a high-fan-in superconducting neuron. The neuron design includes multiple branches representing dendrites, with each branch placed between two JJs that set the threshold of the neuron (see Figure 17). This configuration allows for both positive and negative inductive coupling in each input data branch, supporting both excitatory and inhibitory synaptic data. The resistors on each branch create leaky behaviour in the neuron. An SNN with three hidden layers using this neuron design achieved an accuracy of 97.07% on the MNIST (modified NIST) dataset. The network had a throughput of 8.92 GHz and consumed only 1.5 nJ per inference, including the energy required to cool the network to 4 K.[115]

Since memory storage is difficult to implement in RSFQ technology, magnetic Josephson junctions can be used to implement memory of the past activity of pre- and post-synaptic neurons. In these devices, the Cooper-pair wavefunction extends into the ferromagnetic layer with a damped oscillatory behaviour. Leveraging the physics of the interacting order parameters, Schneider et al. developed a new kind of synapse that utilizes a magnetically doped Josephson junction.[116] Inserting magnetic nanoparticles into the insulating barrier between two superconductors allows for the adjustment of the Josephson junction’s critical current. Since numerous particles can be placed within the same barrier, each aligned in a different direction, the critical current can effectively vary across a continuous range of values, making magnetic Josephson junctions an ideal memory element for the synaptic strength.

The memristive Josephson junction (MRJJ) can also serve as a neuron-inspired device for neuromorphic computing. The paper by Wu et al. [117] investigates the dynamic properties of neuron-like spiking, excitability and bursting in the memristive Josephson junction and its improved version (the inductive memristive Josephson junction, L-MRJJ). Equivalent circuits of the MRJJ and L-MRJJ are shown in Figure 18a and Figure 18b respectively. The MRJJ model is able to reproduce the spiking dynamics of the FitzHugh-Nagumo neuron (FHN model). Unlike the FHN model, the MRJJ model is bistable. The two excitability classes (class I and class II) of the Morris-Lecar neuron are reproduced by the MRJJ model, as shown by its frequency-current curve. The L-MRJJ oscillator exhibits bursting modes analogous to the neuronal bursting of the 3-D Hindmarsh-Rose (HR) model in terms of purely dynamical behaviour, but there are discrepancies between the two models. A fast-slow decomposition shows that the bursting patterns originate from saddle-node and homoclinic bifurcations. The L-MRJJ model has infinitely many equilibria. Coupled L-MRJJ oscillators can achieve both in-phase and antiphase burst synchronisation, similar to the behaviour of coupled Hindmarsh-Rose neurons. During burst synchronisation, however, the L-MRJJ network is only partially synchronised, whereas the HR network is fully synchronised.

Adjusting neuron threshold values in spiking neural networks is important for optimizing network performance and accuracy, as this adjustment allows for fine-tuning the network’s behavior to specific input patterns. Ucpinar et al. [115] proposed a novel on-chip trainable neuron design, where the threshold values of the neurons can be adjusted individually for specific applications or during training.

4.2. BrainFreeze

Combining digital and analogue concepts in mixed-signal spiking neuromorphic architectures offers the advantages of both types of circuits while mitigating some of their disadvantages. Tschirhart et al. [118] proposed a novel mixed-signal neuromorphic design based on superconducting electronics (SCE) – BrainFreeze. This novel architecture integrates bio-inspired analogue neural circuits with established digital technology to enable scalability and programmability not achievable in other superconducting approaches.

The digital components in BrainFreeze support time-multiplexing, programmable synapse weights and programmable neuron connections, enhancing the effectiveness of the hardware. The architecture’s time-multiplexing capability enables multiple neurons within the simulated network to sequentially utilize some of the same physical components, such as the pipelined digital accumulator, thereby enhancing the hardware’s effective density. Communication among neurons within BrainFreeze is facilitated through a digital network, akin to those employed in other large-scale neuromorphic frameworks. This digital network allows the wires connecting neuron cores to be shared across multiple simulated neurons, which eliminates the need for dedicated physical wires linking each pair of neurons and significantly improves scalability. The flexible connectivity offered by the digital network also allows various neural network structures to be implemented by adjusting the routing tables within the network. By employing this approach, BrainFreeze leverages recent progress in SCE digital logic and insights gleaned from large-scale semiconductor neuromorphic architectures.

In its fundamental configuration, the BrainFreeze architecture consists of seven primary elements: control circuitry, a network interface, a spike buffer, a synapse weight memory, an accumulator, a digital-to-analogue converter, and at least one analogue soma circuit. A schematic representation illustrating the overall architecture is provided in Figure 19. The authors refer to one instance of this architecture as a Neuron Core. This architectural framework merges the scalability and programmability allowed by superconducting digital logic with the biologically suggestive functionality enabled by superconducting analogue circuits.
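The interplay of these elements can be sketched in a few lines of software. The toy model below is only an illustration of time-multiplexing, not the BrainFreeze design itself: the class and method names are hypothetical, the weight memory is a nested dictionary, and the digital-to-analogue converter plus analogue soma are replaced by an assumed leaky threshold unit.

```python
class NeuronCore:
    """Toy time-multiplexed core; all names and values are hypothetical."""

    def __init__(self, weights, threshold=1.0, leak=0.9):
        # Synapse weight memory: weights[target][source] -> weight
        self.weights = weights
        self.threshold = threshold
        self.leak = leak
        self.potential = {neuron: 0.0 for neuron in weights}
        self.spike_buffer = []

    def receive(self, source_id):
        """Network interface: store the id of a spiking source neuron."""
        self.spike_buffer.append(source_id)

    def tick(self):
        """Serve every simulated neuron in turn with one shared accumulator."""
        fired = []
        for neuron, row in self.weights.items():    # time-multiplexing loop
            acc = 0.0                                # shared accumulator, reset per neuron
            for source in self.spike_buffer:
                acc += row.get(source, 0.0)
            # Stand-in for DAC + analogue soma: leaky integration and threshold
            self.potential[neuron] = self.leak * self.potential[neuron] + acc
            if self.potential[neuron] >= self.threshold:
                fired.append(neuron)
                self.potential[neuron] = 0.0
        self.spike_buffer.clear()
        return fired

core = NeuronCore({"a": {"x": 0.6}, "b": {"x": 0.3, "y": 0.8}})
core.receive("x")
core.receive("y")
first = core.tick()    # "b" receives 0.3 + 0.8 and crosses the threshold
core.receive("x")
second = core.tick()   # "a" has accumulated 0.9 * 0.6 + 0.6 and now fires
```

Changing the entries of the weight dictionary plays the role of reprogramming the routing tables: the simulated network topology changes while the physical resources stay the same.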

Tschirhart et al. [118] compared BrainFreeze with state-of-the-art CMOS-based neuromorphic architectures such as TrueNorth, SpiNNaker, BrainScaleS, Neurogrid and Loihi in order to demonstrate the potential of the proposed architecture (see Figure 20). The findings indicate that a mixed-signal SCE neuromorphic approach has the potential to improve on the current state of the art in terms of speed, energy efficiency and model complexity.

4.3. Superconducting nanowire-based and phase-slip-based realisations

For a large neuromorphic network, the number of SFQ pulses generated should be high enough to drive a large fan-out. In this case, the JJ is limited in the number of SFQ pulses it can generate. Therefore, it may be very difficult to implement a complete neuromorphic network based solely on the JJ. Schneider et al. [119] have theoretically reported a fan-out of 1 to 10000 and a fan-in of 100 to 1. An approximate estimate of the energy dissipated in a 1-to-128 flux-based fan-out circuit for a given critical current value is reported to be 44 aJ. Additionally, the action potentials in JJs are not strong enough to be easily detectable.

An alternative to the JJ could be a thin superconducting wire, also known as a superconducting nanowire (SNW). The intrinsic non-linearity exhibited by superconducting nanowires positions them as promising candidates for the hardware generation of spiking behaviour. When a bias current flowing through a superconducting nanowire exceeds a threshold known as the critical current, the superconductivity breaks down and the nanowire becomes resistive, generating a voltage. The nanowire switches back to the superconducting state only when the bias current is reduced below the retrapping current and the resistive part (the “hotspot”) cools down. Placing the nanowire in parallel with a shunt resistor initiates electrothermal feedback, resulting in relaxation oscillations.[120] SNWs demonstrate reliable switching from superconducting to resistive states and have shown the capability to produce a higher number of SFQ pulses as output. Toomey et al. [121, 122] have proposed a nanowire-based neuron circuit that is topologically equivalent to the JJ-based bio-inspired neurons discussed above.
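The relaxation oscillations of a shunted nanowire can be reproduced qualitatively with a minimal lumped-element sketch. It rests on several simplifying assumptions that are not taken from Refs. [120-122]: the hotspot is modelled as a fixed resistance that appears when the nanowire current exceeds the critical current and vanishes below the retrapping current, thermal dynamics are ignored, and the nanowire is given a series (kinetic) inductance. All names and parameter values are hypothetical and in arbitrary units.

```python
def count_relaxation_spikes(i_bias, i_c=1.0, i_retrap=0.3, r_shunt=1.0,
                            r_hotspot=20.0, inductance=1.0,
                            dt=1e-3, steps=20000):
    """Count superconducting-to-resistive switching events of a shunted nanowire."""
    i_nw = 0.0          # current through the nanowire branch
    resistive = False   # True while the hotspot exists
    spikes = 0
    for _ in range(steps):
        r_nw = r_hotspot if resistive else 0.0
        # Kirchhoff: L * dI/dt = R_shunt * (I_bias - I) - R_nanowire * I
        di = (r_shunt * (i_bias - i_nw) - r_nw * i_nw) / inductance
        i_nw += di * dt
        if not resistive and i_nw > i_c:
            resistive = True        # hotspot forms, a voltage spike is emitted
            spikes += 1
        elif resistive and i_nw < i_retrap:
            resistive = False       # hotspot heals, current starts to recover
    return spikes

n_above = count_relaxation_spikes(1.5)   # bias above the critical current
n_below = count_relaxation_spikes(0.8)   # bias below it: no switching
```

In this picture the bias current plays the role of the input stimulus: below the critical current the device stays silent, while above it the device emits a periodic train of spikes whose rate grows with the bias.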

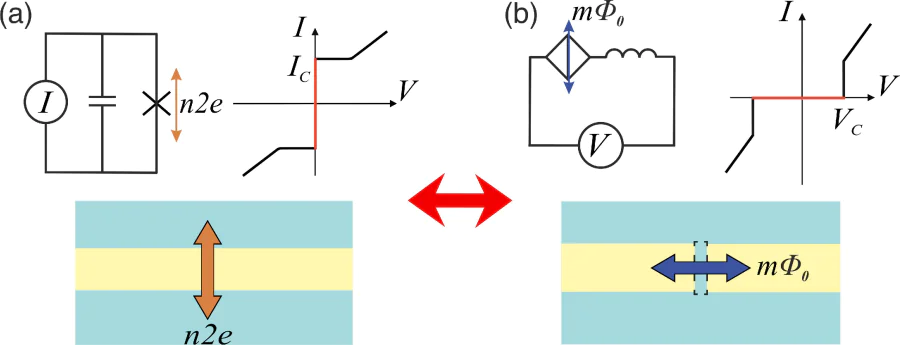

Quantum phase slip can be described as the exact dual of the Josephson effect, based on charge-flux duality (Figure 21). In a quantum phase-slip junction (QPSJ), a magnetic flux quantum tunnels across a superconducting nanowire along with Cooper pair transport and generates a corresponding voltage across the junction.[123, 124] Unlike Josephson junctions and superconducting nanowires, QPSJs do not require a constant current bias. At the same time, the “flux-tunneling” together with voltage spike generation in such systems can be used to implement neuromorphic systems on a par with Josephson junctions.

Cheng et al. [125] introduced a theoretical QPSJ-based spiking neuron design in 2018 (Figure 22). When an input QPSJ fires, it charges a capacitor, building a potential that eventually exceeds the threshold of the output QPSJs connected in parallel. A resistor can be added to maintain the same bias across all QPSJs. This behaviour is consistent with the leaky integrate-and-fire neuron model. The total “firing” energy for this circuit design is given by the switching energy of the QPSJ multiplied by the number of QPSJs required for “firing”, and is estimated to be on the order of $10^{-21}$ J, compared to about 0.33 aJ (per switching event) for a typical JJ-based neuron.
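The leaky integrate-and-fire behaviour mentioned above can be sketched as follows. This is a generic LIF model in arbitrary units, not the circuit of Ref. [125]: each input pulse is assumed to deposit a fixed charge on the capacitor, the voltage leaks through the resistor with time constant $RC$ between pulses, and the neuron fires and resets when an assumed threshold is reached. The function name and all parameter values are hypothetical.

```python
import math

def lif_fire_times(input_times, charge_per_pulse=1.0, capacitance=1.0,
                   resistance=10.0, v_threshold=2.5):
    """Return the input times at which a leaky integrate-and-fire unit fires."""
    v = 0.0
    last_t = None
    fired = []
    for t in sorted(input_times):
        if last_t is not None:
            # Exponential leak through the resistor since the previous pulse
            v *= math.exp(-(t - last_t) / (resistance * capacitance))
        last_t = t
        v += charge_per_pulse / capacitance   # charge delivered by one input pulse
        if v >= v_threshold:
            fired.append(t)                   # output spike
            v = 0.0                           # capacitor discharged on firing
    return fired

dense = lif_fire_times([0, 1, 2, 3, 4, 5])    # closely spaced pulses add up
sparse = lif_fire_times([0, 30, 60])          # widely spaced pulses leak away
```

Closely spaced input pulses accumulate faster than the leak removes them and trigger output spikes, whereas widely spaced pulses never reach the threshold.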

5. Bio-inspired elements for optical neuromorphic systems

Another alternative neuromorphic solution is a network in which spikes are physically realised as electromagnetic field packets. The advantages of such systems as candidates for the hardware implementation of optical neural networks (ONN) include their speed of information transmission, which can be carried out at frequencies exceeding 100 GHz (even at room temperature!),[126] as well as the ability to create distributed networks with a high degree of connectivity.[127] High bandwidth is important for practical applications such as the control of hypersonic aircraft or the processing of radio signals. The first ONN solutions are based on silicon photonics, which is well compatible with CMOS technology, for example on Mach-Zehnder interferometers[128] and micro-ring resonators.[129]